Nathan Louis

Contact info:

About Me

Currently, I am an EECS Ph.D. candidate at the University of Michigan, Ann Arbor. I'm advised by Dr. Jason Corso, and my primary research area is computer vision, particularly video understanding.

While video understanding encompasses many tasks and problems within the video domain, my research interests include generic object tracking, video object detection, human pose estimation, and human pose tracking. My previous work comprises video object grounding and single-target object tracking by learning motion models for Kalman filters.

I completed my B.S. in Electrical Engineering at Kennesaw State University in July 2017 and my Master's requirements at the University of Michigan in May 2019.

Publications

- N Louis, L Zhou, SJ Yule, RD Dias, M Manojlovich, FD Pagani, and JJ Corso. "Temporally Guided Articulated Hand Pose Tracking in Surgical Videos", arXiv preprint 2021. Paper

- MR Ganesh, E Hofesmann, N Louis, and JJ Corso. "ViP: Video Platform for PyTorch", arXiv preprint 2019. Paper. Code

- L Zhou, N Louis, and JJ Corso. "Weakly-Supervised Video Object Grounding from Text by Loss Weighting and Object Interaction", BMVC 2018. Paper. Code

Projects

Explorative 3D Reconstruction

This work was a project for a special topics course. We explore the problem of multi-view 3D mesh reconstruction from a limited set of viewpoints. Much like an intelligent agent, our model learns to select the next best view by predicting regions of high uncertainty, using low-cost silhouette reconstruction from a set of canonical viewpoints. [PDF] [Code]
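To illustrate the general idea of uncertainty-driven view selection, here is a minimal sketch under stated assumptions; it is not the project's method. It assumes a voxel grid whose per-voxel occupancy probabilities come from a silhouette reconstruction, measures uncertainty as binary entropy, and greedily picks the unvisited view that sees the most uncertain voxels. The names `next_best_view`, `occupancy`, and `visibility` are hypothetical.

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy (in bits) of a voxel with occupancy probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # fully certain voxels contribute no uncertainty
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def next_best_view(occupancy, visibility, visited):
    """Greedily choose the unvisited view whose visible voxels are most uncertain.

    occupancy:  per-voxel occupancy probabilities (assumed to come from a
                low-cost silhouette reconstruction of the canonical views)
    visibility: hypothetical dict mapping view id -> voxel indices seen from it
    visited:    set of view ids already captured
    Returns the chosen view id, or None if every view has been visited.
    """
    best_view, best_score = None, -1.0
    for view, voxels in visibility.items():
        if view in visited:
            continue
        score = sum(binary_entropy(occupancy[v]) for v in voxels)
        if score > best_score:
            best_view, best_score = view, score
    return best_view


occupancy = [0.5, 0.9, 0.1, 0.5, 1.0]
visibility = {"front": [0, 1], "side": [2, 3], "top": [1, 4]}
print(next_best_view(occupancy, visibility, visited={"front"}))  # side
```

In the project, the uncertainty is predicted by a learned model rather than computed from a hand-specified visibility map; the greedy argmax shown here is only the simplest selection rule.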

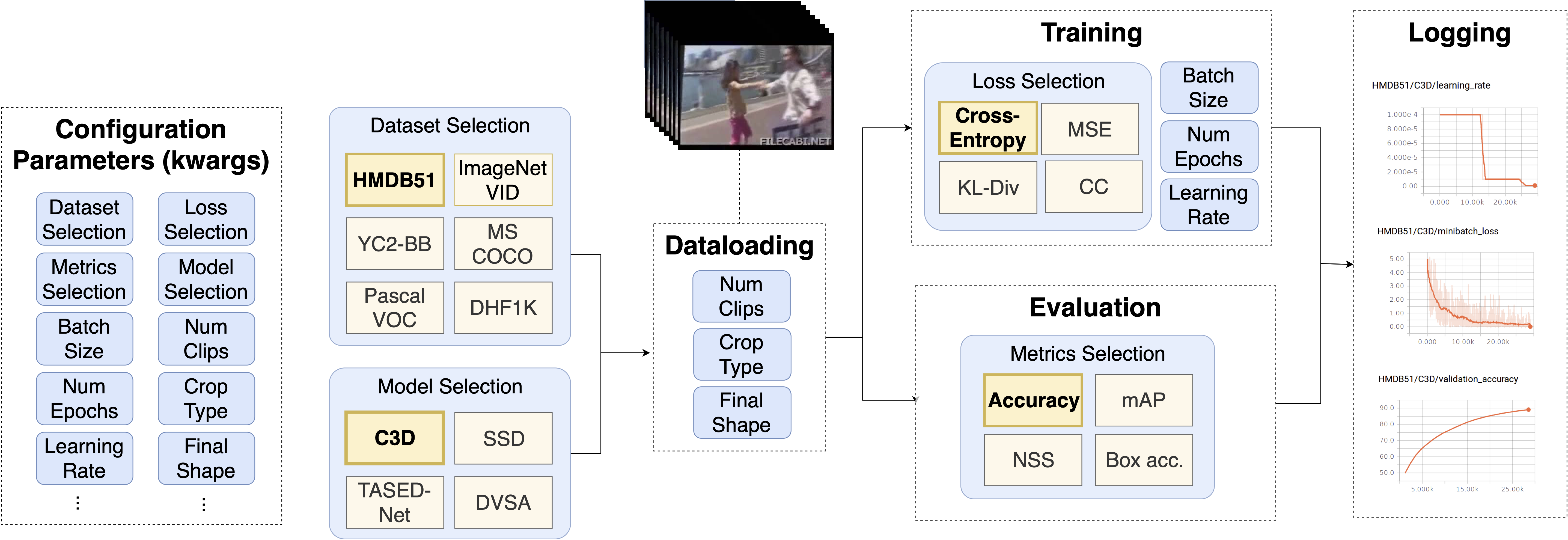

ViP: Video Platform for PyTorch

We developed a deep learning framework, the Video Platform for PyTorch (ViP), designed for prototyping and benchmarking computer vision models in the video domain. ViP is built with flexibility and modularity in mind, providing a single unified interface applicable to all video problem domains, easily reproducible experimental setups, and rapid prototyping of video models. [PDF] [Code]

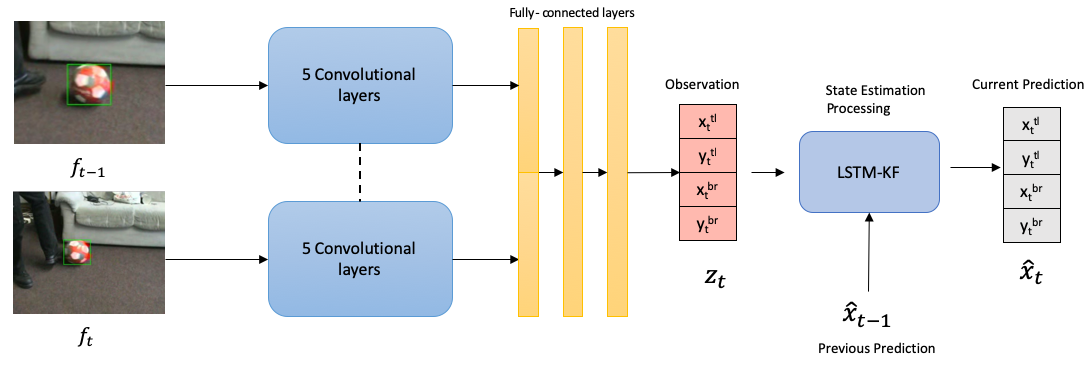

Learning Motion Models for Robust Visual Object Tracking

This was my qualification exam project on visual object tracking in computer vision. I investigated combining state estimation theory with a deep learning framework to produce robust estimates of tracked coordinate positions. A Siamese CNN encodes the observations, and a recurrent neural network then approximates the motion model and covariance estimates used in the Kalman filter updates. [PDF]
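The Kalman filter loop that such a learned motion model plugs into can be sketched as follows. This is a minimal illustration under assumptions, not the project's implementation: it filters a single coordinate with a scalar Kalman filter, treats the measurements as already produced (the Siamese CNN is not modeled), and uses a hypothetical `learned_motion_model` as a placeholder for the RNN that would output the predicted motion and the noise variances.

```python
from dataclasses import dataclass


@dataclass
class KalmanState:
    x: float  # estimated coordinate (e.g., bounding-box center x)
    P: float  # variance of that estimate


def predict(state: KalmanState, motion: float, Q: float) -> KalmanState:
    """Prediction step: apply the predicted motion and add process noise Q."""
    return KalmanState(state.x + motion, state.P + Q)


def update(state: KalmanState, z: float, R: float) -> KalmanState:
    """Correction step: blend in measurement z with measurement noise R."""
    K = state.P / (state.P + R)  # Kalman gain
    return KalmanState(state.x + K * (z - state.x), (1 - K) * state.P)


def learned_motion_model(history):
    """Hypothetical stand-in for the RNN, returning (motion, Q, R).

    Here it simply guesses constant velocity from the last two estimates and
    uses fixed noise variances; a learned model would predict all three.
    """
    motion = history[-1] - history[-2] if len(history) >= 2 else 0.0
    return motion, 1.0, 4.0


def track(measurements, x0, P0=10.0):
    """Run predict/update over a sequence of measurements; return all estimates."""
    state = KalmanState(x0, P0)
    history = [x0]
    for z in measurements:
        motion, Q, R = learned_motion_model(history)
        state = update(predict(state, motion, Q), z, R)
        history.append(state.x)
    return history


print(track([1.0, 2.0, 3.0], x0=0.0))
```

Making the motion and both covariances outputs of a recurrent network, rather than fixed constants, is what lets the filter adapt its trust in the measurements to each sequence.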

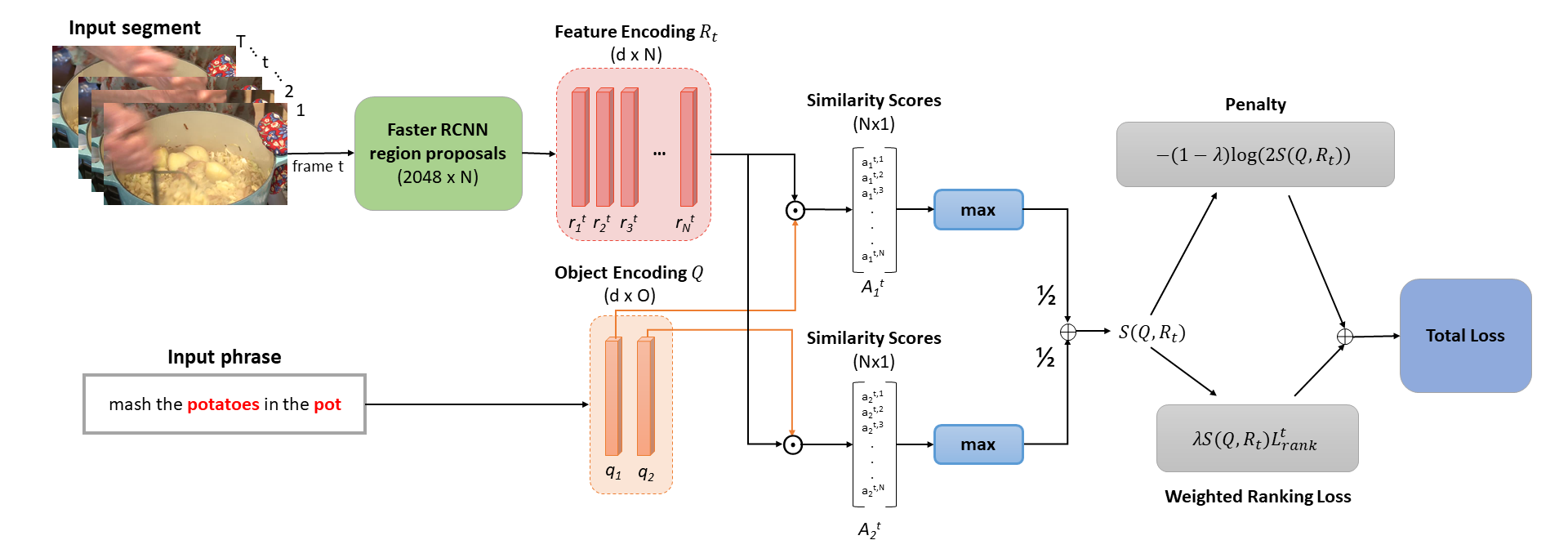

Weakly-Supervised Video Object Grounding from Text by Loss Weighting and Object Interaction

We studied weakly-supervised video object grounding: given a video segment and a corresponding descriptive sentence, the goal is to localize the objects mentioned in the sentence within the video. We evaluate our model on the newly collected YouCook2-BoundingBox benchmark and show improvements over competitive baselines. [PDF] [Code]